Winning a Kaggle Competition in Python - Part 1

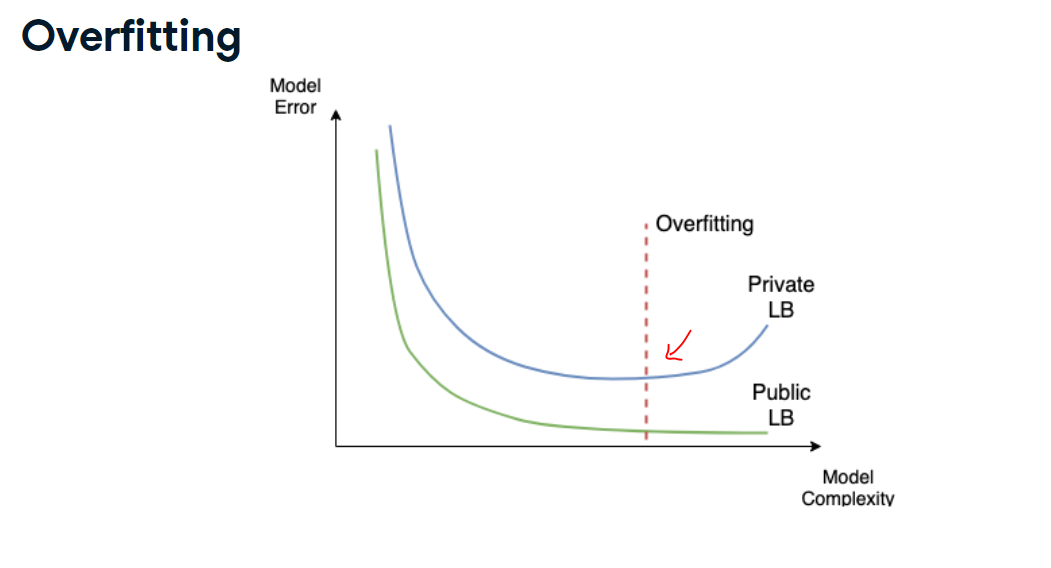

In this first chapter, you will get exposure to the Kaggle competition process. You will train a model and prepare a CSV file ready for submission. You will also learn the difference between the Public and Private test splits, and how to prevent overfitting.

Explore Train Data

You will work with another Kaggle competition called "Store Item Demand Forecasting Challenge". In this competition, you are given 5 years of store-item sales data, and asked to predict 3 months of sales for 50 different items in 10 different stores.

To begin, let's explore the train data for this competition. For faster performance, you will work with a subset of the train data containing only a single month of history.

Your initial goal is to read the input data and take a first look at it.

Instructions:

- Import pandas as pd.

- Read the train data using pandas' read_csv() function.

- Print the head of the train data (using the head() method) to see a sample of the data.

import pandas as pd

# Read train data

train = pd.read_csv('datasets/demand_forecasting_train_1_month.csv')

# Look at the shape of the data

print('Train shape:', train.shape)

# Look at the head() of the data

display(train.head())

Congratulations, you've gotten started with your first Kaggle dataset! It contains 15,500 daily observations of the sales data.
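The 15,500 figure is consistent with one row per store, item, and day: 10 stores times 50 items times 31 days. The sketch below rebuilds that grid from synthetic keys to check the arithmetic. The `date` column name, the specific month, and the `toy_train` name are assumptions for illustration; the real subset may be laid out differently.

```python
import pandas as pd

# Assumption: a hypothetical 31-day month; the real subset's dates may differ.
dates = pd.date_range("2017-12-01", periods=31, freq="D")
stores = range(1, 11)  # 10 stores
items = range(1, 51)   # 50 items

# Build one row per (date, store, item) combination.
grid = pd.MultiIndex.from_product(
    [dates, stores, items], names=["date", "store", "item"]
)
toy_train = grid.to_frame(index=False)

print("Rows:", len(toy_train))
print("Stores:", toy_train["store"].nunique())
print("Items:", toy_train["item"].nunique())
```

If the row count of the real file does not match this kind of product, some store-item-day combinations are probably missing or duplicated.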

Explore test data

Having looked at the train data, let's explore the test data in the "Store Item Demand Forecasting Challenge". Remember that the test dataset generally contains one column fewer than the train dataset.

This column, together with the output format, is presented in the sample submission file. Before making any progress in the competition, you should get familiar with the expected output.

So, let's look at the columns of the test dataset and compare them to the train columns. Additionally, let's explore the format of the sample submission. The train DataFrame is available in your workspace.

Instructions:

- Read the test dataset.

- Print the column names of the train and test datasets.

- Notice that the test columns do not include the target "sales" column. Now, read the sample submission file.

- Look at the head of the submission file to get the output format.

import pandas as pd

# Read the test data

test = pd.read_csv('datasets/demand_forecasting_test.csv')

# Print train and test columns

print('Train columns:', train.columns.tolist())

print('Test columns:', test.columns.tolist())

# Read the sample submission file

sample_submission = pd.read_csv('datasets/sample_submission.csv')

# Look at the head() of the sample submission

display(sample_submission.head())

The sample submission file consists of two columns: the id of the observation and a sales column for your predictions. Kaggle will evaluate your predictions against the true sales data for the corresponding id. So, it’s important to keep track of the predictions by id before submitting them. Let’s jump into the next lesson to see how to prepare a submission file!
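As a quick check, the target can also be found programmatically as the column that train has and test lacks. This is a minimal sketch on toy frames: the values are made up, and the `date` column and the `toy_train` / `toy_test` names are assumptions rather than the real file layout.

```python
import pandas as pd

# Toy stand-ins for the real files (values are made up for illustration).
toy_train = pd.DataFrame({
    "id": [0, 1, 2],
    "date": ["2017-12-01", "2017-12-01", "2017-12-01"],
    "store": [1, 1, 2],
    "item": [1, 2, 1],
    "sales": [19, 33, 15],
})
toy_test = toy_train.drop(columns="sales")

# Columns present in train but absent from test are target candidates.
target_candidates = [c for c in toy_train.columns if c not in toy_test.columns]
print("Target candidates:", target_candidates)
```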

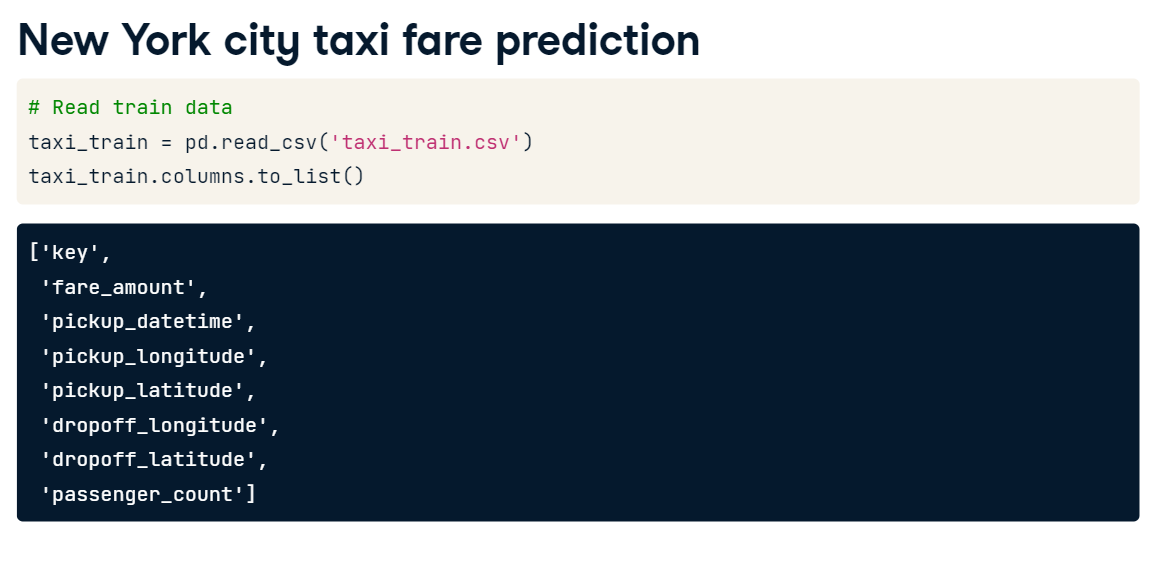

import pandas as pd

# Read train data

taxi_train = pd.read_csv('datasets/taxi_train_chapter_4.csv')

taxi_train.columns.to_list()

# Read test data

taxi_test = pd.read_csv('datasets/taxi_test_chapter_4.csv')

taxi_test.columns.to_list()

The 'fare_amount' column is missing from the test data because it is the target we are predicting.

- Problem Type

import matplotlib.pyplot as plt

# plot a histogram

taxi_train.fare_amount.hist(bins=30, alpha=0.5)

plt.show()

As we can see, fare_amount is a continuous value, so we are dealing with a regression problem.

- Build a model:

from sklearn.linear_model import LinearRegression

lr = LinearRegression()

feature_cols = [

'pickup_longitude',

'pickup_latitude',

'dropoff_longitude',

'dropoff_latitude',

'passenger_count'

]

lr.fit(X=taxi_train[feature_cols], y=taxi_train['fare_amount'])

# make predictions on the test data

taxi_test['fare_amount'] = lr.predict(taxi_test[feature_cols])

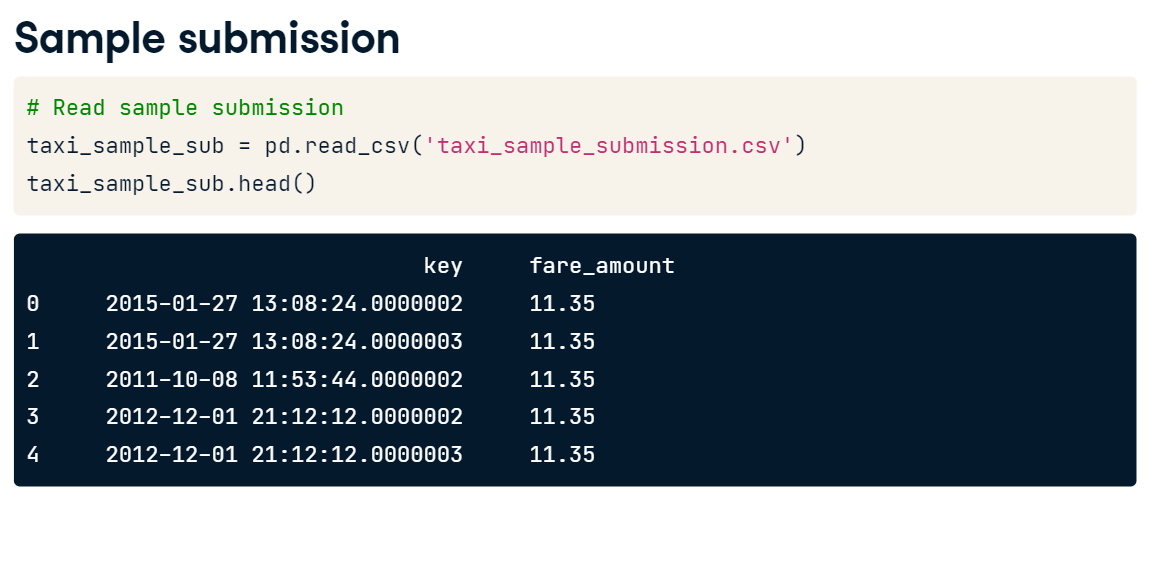

taxi_sample_sub = pd.read_csv('datasets/taxi_sample_submission.csv')

taxi_sample_sub.head()

taxi_test.head()

taxi_submission = taxi_test[['key','fare_amount']]

# save to .csv

taxi_submission.to_csv('first_sub.csv', index=False)

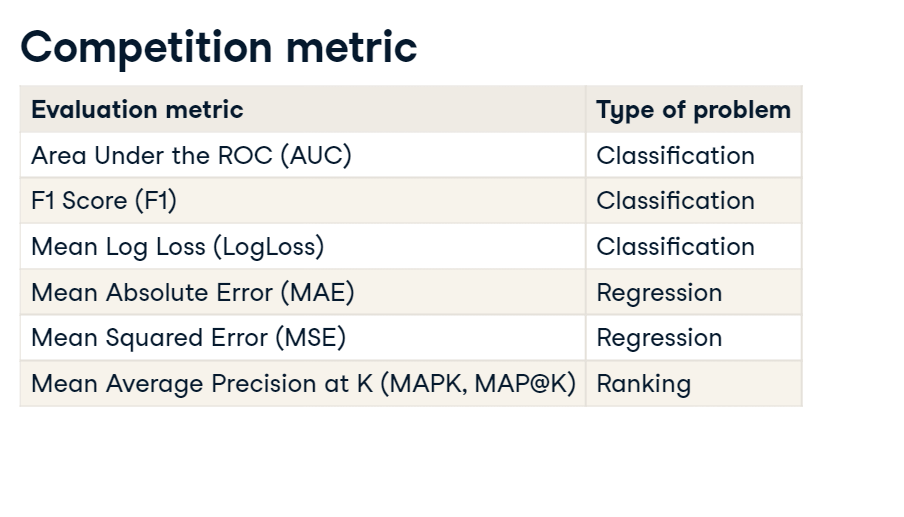

Determine a problem type

You will keep working on the Store Item Demand Forecasting Challenge. Recall that you are given a history of store-item sales data, and asked to predict 3 months of future sales.

Before building a model, you should determine the problem type you are addressing. The goal of this exercise is to look at the distribution of the target variable, and select the correct problem type you will be building a model for.

The train DataFrame is already available in your workspace. It has the target variable column called "sales". Also, matplotlib.pyplot is already imported as plt.

train.sales.hist(bins=30, alpha=0.5)

plt.show()

That's correct! The sales variable is continuous, so you're solving a regression problem.
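Besides looking at the histogram, a rough numeric check can support the same decision. The helper below is a hypothetical heuristic, not part of the exercise: it treats non-numeric targets, or numeric targets with only a few distinct values, as classification and everything else as regression. The `max_classes=20` threshold is an arbitrary assumption.

```python
import pandas as pd
from pandas.api.types import is_numeric_dtype


def guess_problem_type(target: pd.Series, max_classes: int = 20) -> str:
    """Hypothetical helper: guess the problem type from the target column.

    Non-numeric targets, or numeric targets with at most `max_classes`
    distinct values, are treated as classification; the rest as regression.
    """
    if not is_numeric_dtype(target) or target.nunique() <= max_classes:
        return "classification"
    return "regression"


# Made-up targets for illustration.
many_values = pd.Series(range(100), name="sales")
few_values = pd.Series([0, 1, 0, 1, 1], name="label")

print(guess_problem_type(many_values))
print(guess_problem_type(few_values))
```

A heuristic like this can be wrong (for example, a rating from 1 to 5 could be modeled either way), so the competition's evaluation metric remains the more reliable guide.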

Train a simple model

As you determined, you are dealing with a regression problem, so you're ready to build a model for a subsequent submission. This time, instead of building the simplest Linear Regression model as in the slides, let's build an out-of-the-box Random Forest model.

You will use the RandomForestRegressor class from the scikit-learn library.

Your objective is to train a Random Forest model with default parameters on the "store" and "item" features.

Instructions:

- Read the train data using pandas.

- Create a Random Forest object.

- Train the Random Forest model on the "store" and "item" features with "sales" as a target.

import pandas as pd

from sklearn.ensemble import RandomForestRegressor

# Create a Random Forest object

rf = RandomForestRegressor()

# Train a model

rf.fit(X=train[['store', 'item']], y=train['sales'])
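A Random Forest is trained on random bootstrap samples, so two default fits can give slightly different predictions. Here is a minimal sketch, on made-up data, of fixing `random_state` so that the fit is repeatable. The toy values, the `fit_and_predict` helper, and the seed 42 are assumptions for illustration and are not part of the exercise.

```python
import pandas as pd
from sklearn.ensemble import RandomForestRegressor

# Made-up data with the same column names as the exercise.
toy_train = pd.DataFrame({
    "store": [1, 1, 2, 2, 1, 2],
    "item": [1, 2, 1, 2, 1, 2],
    "sales": [10, 20, 30, 40, 12, 38],
})
features = ["store", "item"]


def fit_and_predict(seed):
    """Fit a default Random Forest with a fixed seed and predict on the train rows."""
    rf = RandomForestRegressor(random_state=seed)
    rf.fit(X=toy_train[features], y=toy_train["sales"])
    return rf.predict(toy_train[features])


first = fit_and_predict(42)
second = fit_and_predict(42)

# With the same seed, both runs produce identical predictions.
print((first == second).all())
```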

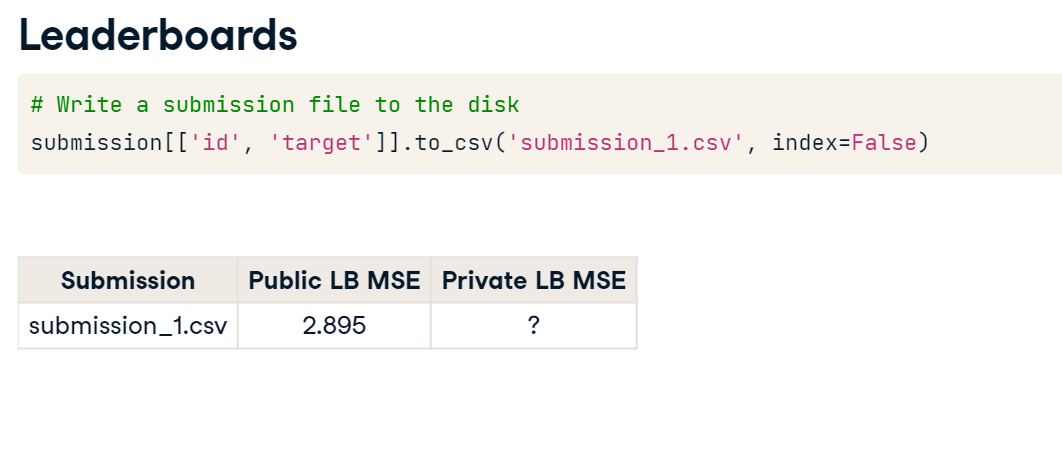

Prepare a submission

You've already built a model on the training data from the Kaggle Store Item Demand Forecasting Challenge. Now, it's time to make predictions on the test data and create a submission file in the specified format.

Your goal is to read the test data, make predictions, and save these in the format specified in the "sample_submission.csv" file. The rf object you created in the previous exercise is available in your workspace.

Note that from now on, and for the rest of the course, the pandas library will always be imported for you and can be accessed as pd.

Instructions:

- Read "test.csv" and "sample_submission.csv" files using pandas.

- Look at the head of the sample submission to determine the format.

- Note that the sample submission has id and sales columns. Now, make predictions on the test data using the rf model that you fitted on the train data.

- Using the format given in the sample submission, write your results to a new file.

test = pd.read_csv("datasets/test.csv")

sample_submission = pd.read_csv("datasets/sample_submission.csv")

# Show the head() of the sample_submission

display(sample_submission.head())

test['sales'] = rf.predict(test[['store', 'item']])

# Write test predictions using the sample_submission format

test[['id', 'sales']].to_csv('kaggle_submission.csv', index=False)

Congratulations! You've prepared your first Kaggle submission. Now you can upload it to the Kaggle platform and see your score and current position on the Leaderboard. Move forward to learn more about the Leaderboard itself!
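Before uploading, it can help to compare the submission against the sample file. The `check_submission` helper below is a hypothetical sketch of such a check, shown on made-up frames; it assumes the identifier column is called `id`, as in this competition's sample submission.

```python
import pandas as pd


def check_submission(submission: pd.DataFrame, sample: pd.DataFrame) -> list:
    """Hypothetical helper: return a list of problems found (empty if none)."""
    problems = []
    if list(submission.columns) != list(sample.columns):
        problems.append("columns differ from the sample submission")
    if len(submission) != len(sample):
        problems.append("row count differs from the sample submission")
    elif "id" in submission.columns and "id" in sample.columns:
        # Row counts match, so the ids can be compared position by position.
        if not (submission["id"].to_numpy() == sample["id"].to_numpy()).all():
            problems.append("ids differ from the sample submission")
    if submission.isna().any().any():
        problems.append("submission contains missing values")
    return problems


# Made-up frames for illustration.
sample = pd.DataFrame({"id": [0, 1, 2], "sales": [52, 52, 52]})
good = pd.DataFrame({"id": [0, 1, 2], "sales": [19.5, 33.0, 15.2]})
bad = pd.DataFrame({"id": [0, 1], "sales": [19.5, None]})

print(check_submission(good, sample))
print(check_submission(bad, sample))
```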

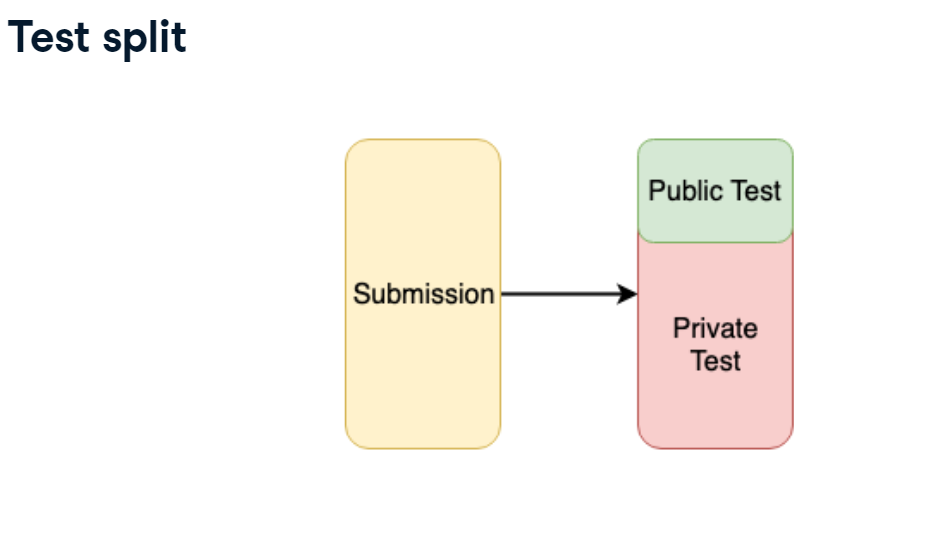

Public vs Private Leaderboard

Train XGBoost models

Any machine learning method can potentially overfit. You will see this in an example with XGBoost. Again, you are working with the Store Item Demand Forecasting Challenge. The train DataFrame is available in your workspace.

Firstly, let's train multiple XGBoost models with different sets of hyperparameters using XGBoost's learning API. The single hyperparameter you will change is:

max_depth - maximum depth of a tree. Increasing this value will make the model more complex and more likely to overfit.

Instructions:

- Set the maximum depth to 2. Then hit the Submit Answer button to train the first model.

- Now, set the maximum depth to 8. Then hit the Submit Answer button to train the second model.

- Finally, set the maximum depth to 15. Then hit the Submit Answer button to train the third model.

df_full = pd.read_csv('datasets/train.csv')

# test = pd.read_csv('datasets/test.csv')

# Randomly sample 30,000 rows from the full DataFrame

df = df_full.sample(n=30000, random_state=1)

# info() prints its summary directly, so no print() is needed

df.info()

from sklearn.model_selection import train_test_split

train, test = train_test_split(df, test_size=0.5, random_state=42)

import xgboost as xgb

# Create DMatrix on train data

dtrain = xgb.DMatrix(data=train[['store', 'item']],

label=train['sales'])

# dtest = xgb.DMatrix(data=test[['store', 'item']])

# Define xgboost parameters

params = {'objective': 'reg:squarederror',  # 'reg:linear' is a deprecated alias

          'max_depth': 2,

          'verbosity': 0}

# Train xgboost model

xg_depth_2 = xgb.train(params=params, dtrain=dtrain)

# Define xgboost parameters

params = {'objective': 'reg:squarederror',  # 'reg:linear' is a deprecated alias

          'max_depth': 8,

          'verbosity': 0}

# Train xgboost model

xg_depth_8 = xgb.train(params=params, dtrain=dtrain)

# Define xgboost parameters

params = {'objective': 'reg:squarederror',  # 'reg:linear' is a deprecated alias

          'max_depth': 15,

          'verbosity': 0}

# Train xgboost model

xg_depth_15 = xgb.train(params=params, dtrain=dtrain)

# Define xgboost parameters

params = {'objective': 'reg:squarederror',  # 'reg:linear' is a deprecated alias

          'max_depth': 30,

          'verbosity': 0}

# Train xgboost model

xg_depth_30 = xgb.train(params=params, dtrain=dtrain)

Explore overfitting XGBoost

Having trained several XGBoost models with different maximum depths, you will now evaluate their quality. For this purpose, you will measure the quality of each model on both the train data and the test data. As you know by now, the train data is the data the models have been trained on. The test data is held-out sales data that the models have never seen before.

The goal of this exercise is to determine whether any of the trained models is overfitting. To measure the quality of the models you will use Mean Squared Error (MSE). It's available in sklearn.metrics as the mean_squared_error() function, which takes two arguments: true values and predicted values.
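For reference, MSE is the average of the squared differences between the true and predicted values. The sketch below computes it by hand on made-up numbers; the `mse` helper is only for illustration and is not the scikit-learn implementation.

```python
def mse(y_true, y_pred):
    """Mean of squared differences between true and predicted values."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sum(squared_errors) / len(squared_errors)


# Made-up numbers: the errors are -1, 0 and 2, so the squares are 1, 0 and 4.
y_true = [10, 12, 15]
y_pred = [11, 12, 13]

print(round(mse(y_true, y_pred), 3))  # (1 + 0 + 4) / 3, about 1.667
```

Because the errors are squared, a few large misses raise MSE much more than many small ones.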

The train and test DataFrames, together with the trained models (xg_depth_2, xg_depth_8, xg_depth_15, xg_depth_30), are available in your workspace.

Instructions

- Make predictions for each model on both the train and test data.

- Calculate the MSE between the true values and your predictions for both the train and test data.

from sklearn.metrics import mean_squared_error

dtrain_ = xgb.DMatrix(data=train[['store', 'item']])

dtest = xgb.DMatrix(data=test[['store', 'item']])

print(len(train), len(test))

# For each of the trained models

for model in [xg_depth_2, xg_depth_8, xg_depth_15, xg_depth_30]:

    # Make predictions

    train_pred = model.predict(dtrain_)

    test_pred = model.predict(dtest)

    # Calculate metrics

    mse_train = mean_squared_error(train['sales'], train_pred)

    mse_test = mean_squared_error(test['sales'], test_pred)

    print('MSE Train: {:.3f}. MSE Test: {:.3f}'.format(mse_train, mse_test))
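The usual sign of overfitting is a train MSE that keeps falling as depth grows while the test MSE stops improving or gets worse. The sketch below reproduces that comparison on synthetic data. To keep it small it swaps in scikit-learn's DecisionTreeRegressor for XGBoost, so it illustrates the effect of max_depth in general and not the exact models trained above; the data, the noise level, and the `results` name are made up for illustration.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: a smooth signal plus noise (not the competition data).
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(400, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.5, size=400)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=42
)

# Fit one tree per depth and record (train MSE, test MSE).
results = {}
for depth in [2, 8, 15]:
    tree = DecisionTreeRegressor(max_depth=depth, random_state=0)
    tree.fit(X_train, y_train)
    mse_train = mean_squared_error(y_train, tree.predict(X_train))
    mse_test = mean_squared_error(y_test, tree.predict(X_test))
    results[depth] = (mse_train, mse_test)
    print('Depth {}: MSE Train: {:.3f}. MSE Test: {:.3f}'.format(
        depth, mse_train, mse_test))
```

The number to watch is the gap between the two columns: a deep model with a very low train MSE and a clearly higher test MSE has memorized noise in the train data.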